Vision-language modeling is a rapidly growing field in artificial intelligence (AI) that aims to bridge the gap between visual and textual information, allowing machines to understand and process both images and text in a unified manner. This interdisciplinary approach combines the strengths of computer vision, which focuses on image processing, and natural language processing (NLP), which deals with understanding and generating human language. Vision-language models (VLMs) enable machines to comprehend and interact with the world in a more human-like manner, opening up exciting possibilities across various applications, from search engines to autonomous systems.

What is Vision-Language Modeling?

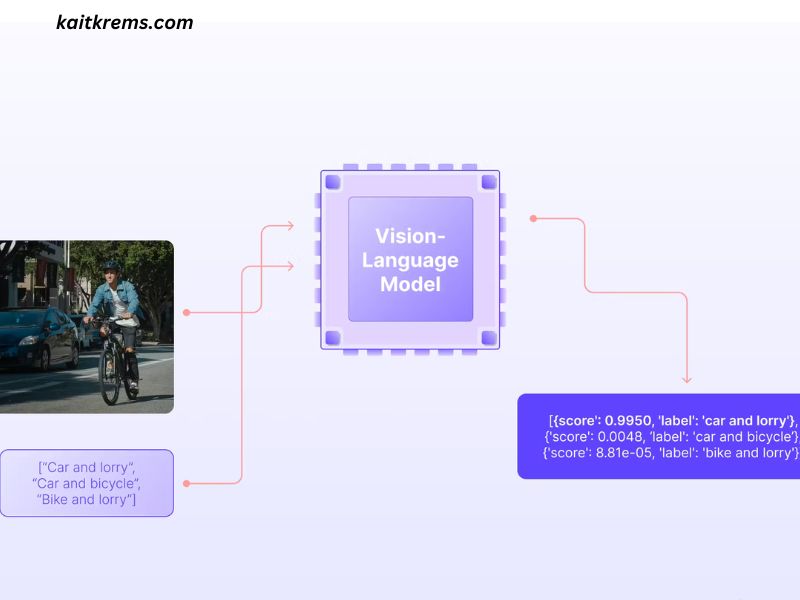

At its core, vision-language modeling is concerned with creating algorithms and systems that can interpret visual and textual information together. This is a challenging task because the model has to understand how images and text relate to each other. For example, given an image of a cat sitting on a couch, a vision-language model should be able to describe the image in text (“A cat is sitting on a couch”). Generative systems can also work in the other direction and produce an image from a textual description (“A cat sitting on a couch”).

Integrating the two modalities matters because humans understand the world by constantly combining visual and verbal cues, and AI systems that do the same come closer to that kind of understanding. Vision-language modeling is therefore a powerful tool for enabling machines to understand not just individual images or pieces of text, but how these two forms of data complement each other in various contexts.

Historical Context and Evolution

The concept of combining vision and language can be traced back to early AI research, where the goal was to make machines understand the relationship between words and images. Initially, the approaches were relatively simple, using handcrafted features from images (like color histograms and edge detection) and basic linguistic structures from text (like part-of-speech tagging). However, these early methods struggled to capture the deep, complex relationships between the two modalities.

The breakthrough in vision-language modeling came with the advent of deep learning, particularly convolutional neural networks (CNNs) for image processing and recurrent neural networks (RNNs) for language processing. These advances allowed image and text representations to be learned end to end from raw data instead of being handcrafted, making the models far better at handling real-world complexity.

Another notable step came in 2015 with Visual Semantic Role Labeling (v-SRL), a task in which a model must recognize the action taking place in an image and identify the objects that play each role in that action, much as semantic role labeling does for the words of a sentence. Work of this kind pushed models beyond simply naming objects toward describing how they relate to one another, paving the way for later advancements.

In recent years, OpenAI’s CLIP (Contrastive Language-Image Pretraining) and DALL·E and DeepMind’s Flamingo have pushed the boundaries even further: CLIP matches images with text, DALL·E generates images from textual prompts, and Flamingo generates text in response to images and text. These models, powered by massive datasets and increasingly sophisticated architectures, have made vision-language modeling one of the most active areas in AI research today.

Key Concepts in Vision-Language Modeling

- Multimodal Learning

Vision-language models are fundamentally based on the concept of multimodal learning, which refers to training models to process and understand multiple forms of data simultaneously. This is in contrast to traditional AI models, which are typically designed to process a single type of data, such as text or images. By learning from multiple modalities, vision-language models can capture the complex relationships between images and text.

- Representation Learning

In vision-language modeling, a major focus is on learning representations of both images and text that can be used for downstream tasks like caption generation, image retrieval, or question answering. This typically involves the use of deep neural networks to map both visual and textual inputs into a common feature space, where similarities and relationships between the two can be measured and leveraged.

- Cross-Modal Retrieval

One of the key applications of vision-language models is cross-modal retrieval, where a system can take an image as input and retrieve relevant textual information or vice versa. For example, given an image of a beach, the system might retrieve descriptive captions or articles related to the image. Conversely, given a textual query, such as “A sunset over the mountains,” the model might retrieve images that match the description.

- Text-to-Image and Image-to-Text Generation

Another prominent application is generating images from textual descriptions (text-to-image) or generating textual descriptions from images (image-to-text). In the text-to-image scenario, a vision-language model could create a realistic image from a detailed description, such as “A futuristic city skyline at dusk.” In image-to-text generation, the model could look at a photo and automatically produce a description like “A man riding a bicycle through a park.”

- Attention Mechanisms

Attention mechanisms, particularly those used in models like transformers, have played a crucial role in improving vision-language models. These mechanisms allow models to focus on specific parts of the input (either the image or the text) when making predictions or generating responses. In a vision-language model, attention can help the system focus on relevant parts of the image while generating a caption or focus on certain words in a sentence when generating an image.
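To make the attention idea concrete, here is a minimal, pure-Python sketch of scaled dot-product cross-attention in which caption words act as queries over image patches. It is an illustration under stated assumptions, not any particular model’s implementation: the patch and word vectors are hypothetical hand-picked numbers, the learned query/key/value projection matrices that real transformers apply are omitted, and the function names are our own.

```python
import math

# Toy cross-attention: each text token (query) attends over image patch features
# (keys/values). All vectors are hypothetical toy numbers, not real model outputs,
# and the learned Q/K/V projections of a real transformer are omitted.

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attention(queries, keys, values):
    """Scaled dot-product attention.

    queries: one vector per text token (length d)
    keys, values: one vector per image patch (keys have length d)
    Returns (outputs, weights): outputs[i] is a weighted mix of the value vectors,
    weights[i] holds text token i's attention weights over the patches.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        mixed = [sum(w[j] * values[j][c] for j in range(len(values)))
                 for c in range(len(values[0]))]
        outputs.append(mixed)
        weights.append(w)
    return outputs, weights

# Hypothetical 2-d keys for three image patches: "cat", "couch", "background".
patch_keys = [[4.0, 0.0], [0.0, 4.0], [0.5, 0.5]]
patch_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Hypothetical query vectors for the caption words "cat" and "couch".
word_queries = [[3.0, 0.0], [0.0, 3.0]]

_, attn = cross_attention(word_queries, patch_keys, patch_values)
for word, w in zip(["cat", "couch"], attn):
    best = max(range(len(w)), key=w.__getitem__)
    print(word, "attends most to patch", best)
```

Each word’s weights come from a softmax over the patches, so they sum to 1, and the patch whose key best aligns with the word’s query receives most of the weight. In a trained captioning model, the same mechanism lets the decoder concentrate on the cat region while it emits the word “cat”.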

Vision-Language Models and Their Architecture

Modern vision-language models typically pair an image encoder with a text encoder or language model, and connect the two either through a shared embedding space or through attention layers that let one modality attend to the other. Below are some common approaches:

- Transformer-Based Architectures

Transformers, originally developed for NLP tasks, have become the backbone of most recent vision-language models. One of the most widely used transformer-based vision-language models is CLIP (Contrastive Language-Image Pretraining), which learns to associate images and text by training on a large dataset of image-text pairs. It maps image and text features into a shared embedding space, which lets it find relevant images for a given text prompt or rank candidate captions for a given image; CLIP itself does not generate text. DALL·E is another well-known example, which builds on transformer architectures to generate high-quality images from text descriptions. By leveraging large-scale pretraining, generative models of this kind can produce creative and complex images from a wide range of textual prompts.

- Multimodal Pretraining

Most state-of-the-art vision-language models are pretrained on large-scale multimodal datasets, where the model is exposed to millions of image-text pairs. This pretraining helps the model learn the relationship between images and text in a way that can be fine-tuned for specific downstream tasks such as captioning, retrieval, and question answering. Pretraining on a diverse set of data allows these models to generalize well to a variety of real-world applications.

- Contrastive Learning

Many vision-language models use contrastive learning, a technique in which the model is trained to distinguish between matching and non-matching pairs of images and text. For example, a model might be presented with an image and a caption and must learn to associate the two while distinguishing them from irrelevant images and captions. This helps the model learn to recognize which aspects of the image correspond to which parts of the text.
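The contrastive objective described above can be sketched as a symmetric cross-entropy over a batch similarity matrix, in the spirit of CLIP’s training objective. This is a simplified illustration, not CLIP’s code: the 3-dimensional embeddings are hypothetical stand-ins for image- and text-encoder outputs, the temperature is fixed at an assumed 0.07 (CLIP learns this value during training), and the helper names are our own. The same cosine similarities also drive cross-modal retrieval, shown by `retrieve`.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def similarity_matrix(image_embs, text_embs, temperature=0.07):
    """logits[i][j] = cos(image_i, text_j) / temperature (temperature is an assumption)."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    return [[sum(a * b for a, b in zip(i, t)) / temperature for t in txts] for i in imgs]

def cross_entropy_diag(logits):
    """Mean cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum = m + math.log(sum(math.exp(x - m) for x in row))  # stable log-sum-exp
        total += log_sum - row[i]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric loss: average of image-to-text and text-to-image cross-entropy."""
    logits = similarity_matrix(image_embs, text_embs, temperature)
    transposed = [list(col) for col in zip(*logits)]
    return (cross_entropy_diag(logits) + cross_entropy_diag(transposed)) / 2

def retrieve(query_emb, candidate_embs):
    """Rank candidate indices by cosine similarity to the query, best first."""
    q = normalize(query_emb)
    scores = [sum(a * b for a, b in zip(q, normalize(c))) for c in candidate_embs]
    return sorted(range(len(candidate_embs)), key=lambda j: scores[j], reverse=True)

# Hypothetical embeddings for a batch of three matched image-caption pairs.
images = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.1, 0.0, 1.0]]
captions_aligned = [[0.9, 0.2, 0.0], [0.1, 0.9, 0.0], [0.0, 0.1, 0.9]]
captions_shuffled = [captions_aligned[1], captions_aligned[2], captions_aligned[0]]

print(contrastive_loss(images, captions_aligned))   # small: each image matches its caption
print(contrastive_loss(images, captions_shuffled))  # larger: pairs are mismatched
print(retrieve(captions_aligned[2], images))        # text-to-image search: [2, 1, 0]
```

Each image is pulled toward its own caption (the diagonal of the similarity matrix) and pushed away from the other captions in the batch, and vice versa, so scrambling the pairing raises the loss sharply. Using the other items in the batch as negatives is what makes this objective cheap to scale to large image-text datasets.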

Applications of Vision-Language Models

- Image Captioning

One of the most popular applications of vision-language modeling is automatic image captioning. Given an image, the model generates a caption that describes its contents in natural language. This is especially useful for accessibility, for example by producing image descriptions for blind and low-vision users.

- Visual Question Answering (VQA)

VQA systems use both visual and textual information to answer questions about an image. For instance, a model might be shown an image of a dog and asked, “What color is the dog?” The model would process the image and the question and generate a text-based answer, such as “The dog is brown.”

- Image Search Engines

Vision-language models are also transforming search engines. Instead of relying solely on textual keywords, search engines can now use images to refine searches or respond to complex queries. This allows for more accurate and nuanced search results.

- Autonomous Systems

Vision-language models are being integrated into autonomous systems, such as self-driving cars and robots, where understanding both visual inputs (like images or video) and textual commands is essential for navigating the environment and interacting with humans.

- Content Generation and Creativity

Vision-language models like DALL·E have demonstrated the ability to generate creative content, including art, design, and other visual assets, directly from textual descriptions. This has immense potential in fields like marketing, entertainment, and media.

Challenges and Future Directions

While vision-language modeling has made significant strides, several challenges remain. One of the biggest hurdles is the need for large-scale datasets that pair images with high-quality textual descriptions. Additionally, ensuring that these models can generate accurate and meaningful content in various languages and contexts is still a work in progress.

Looking to the future, advancements in vision-language modeling could enable even more sophisticated applications, including more immersive experiences in virtual and augmented reality, improved human-computer interactions, and better integration of AI in creative industries.

Conclusion

Vision-language modeling is a powerful and transformative area of AI that enables machines to understand and generate both images and text. Through architectures and learning techniques such as transformers and contrastive learning, vision-language models are reshaping a range of industries by bridging the gap between the visual and textual worlds. As research in this field continues to advance, we can expect more applications and innovations that bring machines closer to understanding the world the way humans do.